Every second, businesses are flooded with information. Learning to draw meaningful insights from a wide range of sources has become essential to staying competitive. Data extraction is the process of retrieving specific information from structured, semi-structured, or unstructured sources and converting it into a usable form.

This skill can transform how your organization operates, whether you are analysing customer behaviour, tracking market trends, or automating business processes.

This guide covers what you need to know about extracting valuable information from various sources, the methods you can employ, and the available solutions that can streamline your workflow.

What Is Data Extraction?

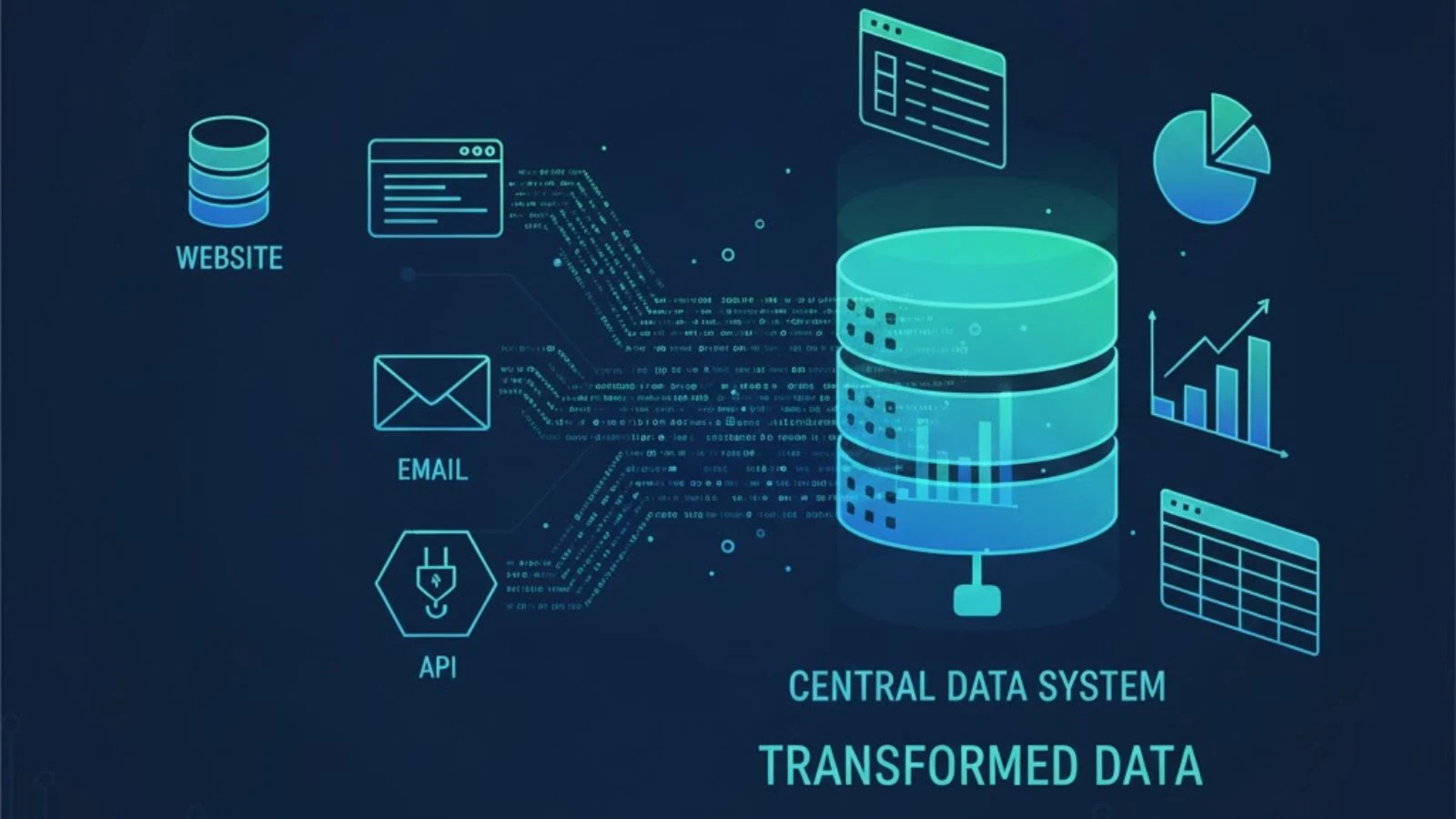

Simply stated, data extraction is the systematic process of locating and retrieving the right information from sources such as databases, websites, documents, emails, and APIs. It is a foundational concept in big data.

Unlike basic copy-pasting, data extraction is an intelligent process. It involves parsing, pattern recognition, and transforming raw, unstructured information into meaningful formats like spreadsheets, databases, or JSON files that systems can actually work with.

The extracted data is then used across critical functions: business intelligence, market research, lead generation, price monitoring, academic research, and compliance reporting. This is how organizations move away from gut-driven decisions and outdated reports, and instead operate on accurate, timely, and actionable information.

Core Data Extraction Techniques:

Understanding different methodologies helps you choose the right approach for your specific needs.

1. Manual Extraction:

The most basic technique involves humans manually copying information from sources and entering it into target systems. While this method can be accurate for small datasets, it is time-consuming, increasingly prone to human error as volume grows, and impractical to scale to large volumes of information.

2. Automated Extraction Using Scripts:

Programming languages like Python, JavaScript, and R enable developers to write custom scripts that automatically retrieve information from various sources. These scripts can parse HTML, process CSV files, query databases, and interact with APIs. This approach offers flexibility and customization but requires technical expertise and ongoing maintenance.
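
As a minimal sketch of what such a script can look like, the example below reads CSV text and keeps only rows above a price threshold. The column names (`sku`, `name`, `price`), the sample data, and the `extract_rows` helper are assumptions made for illustration; a real script would read from a file or a network source instead of an inline string.

```python
import csv
import io

# Hypothetical sample data; in practice this would come from a file or download.
CSV_DATA = """sku,name,price
A100,Widget,19.99
A200,Gadget,5.50
A300,Gizmo,42.00
"""

def extract_rows(csv_text, min_price):
    """Parse CSV text and return rows priced at or above min_price."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [
        {"sku": row["sku"], "name": row["name"], "price": float(row["price"])}
        for row in reader
        if float(row["price"]) >= min_price
    ]

expensive = extract_rows(CSV_DATA, min_price=10.0)
for row in expensive:
    print(row)
```

Converting `price` to a float at extraction time means downstream steps can compare and aggregate values without re-parsing strings.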

3. Template-Based Extraction:

This technique uses predefined templates or patterns to identify and extract specific fields from standardized documents. Invoice processing, receipt scanning, and form processing commonly use template-based methods. The limitation is that templates work best with consistent document formats.
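
One simple way to picture a template is as a mapping from field names to patterns. The sketch below assumes a hypothetical invoice layout with `Invoice No:`, `Date:`, and `Total Due:` labels; the template, the sample text, and the `apply_template` helper are all illustrative, not taken from any specific product.

```python
import re

# Hypothetical template: field name -> regex with exactly one capture group.
INVOICE_TEMPLATE = {
    "invoice_number": r"Invoice No:\s*(\S+)",
    "date": r"Date:\s*(\d{4}-\d{2}-\d{2})",
    "total": r"Total Due:\s*\$([\d,]+\.\d{2})",
}

# Hypothetical document text, e.g. the output of an OCR step.
SAMPLE_INVOICE = """
ACME Supplies
Invoice No: INV-2024-0042
Date: 2024-03-15
Total Due: $1,250.00
"""

def apply_template(template, text):
    """Return a dict of extracted fields; fields that do not match are None."""
    result = {}
    for field, pattern in template.items():
        match = re.search(pattern, text)
        result[field] = match.group(1) if match else None
    return result

fields = apply_template(INVOICE_TEMPLATE, SAMPLE_INVOICE)
print(fields)
```

A document that does not follow the expected layout yields `None` for the affected fields, which illustrates why this approach depends on consistent formats.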

4. AI-Powered Extraction:

Modern artificial intelligence and machine learning algorithms can understand context, recognize patterns, and extract information from unstructured sources with minimal human intervention. These systems learn from examples, and their accuracy can improve as they are retrained on more data.

Essential Data Extraction Tools:

The market offers numerous solutions, each designed for different use cases and skill levels.

1. Web Scraping Tools:

Data extraction tools specifically designed for web scraping include solutions like Beautiful Soup, Scrapy, and Selenium. These allow users to navigate websites, interact with dynamic content, and extract information from HTML structures. Some tools offer visual interfaces where users can point and click on elements they want to extract, making the process accessible to non-programmers.
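
To show the underlying idea of extracting fields from HTML structure, here is a small sketch that uses only Python's standard-library `html.parser`, so it runs without installing anything. The HTML snippet, the `product`, `name`, and `price` class names, and the `ProductParser` class are assumptions for illustration; the libraries named above offer higher-level APIs for the same task.

```python
from html.parser import HTMLParser

# Hypothetical page fragment; a real scraper would download this.
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Widget</span><span class="price">$19.99</span></li>
  <li class="product"><span class="name">Gadget</span><span class="price">$5.50</span></li>
</ul>
"""

class ProductParser(HTMLParser):
    """Collect the name and price text of each <li class="product">."""

    def __init__(self):
        super().__init__()
        self.products = []
        self._field = None  # field currently being read, if any

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "li" and css_class == "product":
            self.products.append({})
        elif tag == "span" and css_class in ("name", "price"):
            self._field = css_class

    def handle_data(self, data):
        # Only keep text that sits inside a tracked <span>.
        if self._field and self.products:
            self.products[-1][self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None

parser = ProductParser()
parser.feed(SAMPLE_HTML)
products = parser.products
print(products)
```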

2. API-Based Tools:

Many modern platforms provide Application Programming Interfaces (APIs) that allow structured access to their information. Tools like Postman, Insomnia, and custom API clients help developers efficiently retrieve information through these official channels, ensuring reliability and compliance with platform policies.
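
A common pattern when extracting through an API is following pagination until no pages remain. The sketch below assumes a hypothetical response shape with `items` and `next_page` keys, and replaces the real HTTP call with a stand-in `fetch_page` function so that it runs offline; actual APIs differ in how they signal the next page.

```python
import json

# Stand-in for HTTP responses: maps a page number to a JSON body.
# The "items" / "next_page" shape is an assumption, not a standard.
FAKE_RESPONSES = {
    1: '{"items": [{"id": 1}, {"id": 2}], "next_page": 2}',
    2: '{"items": [{"id": 3}], "next_page": null}',
}

def fetch_page(page):
    """Hypothetical fetcher; a real one would issue an HTTP request."""
    return FAKE_RESPONSES[page]

def extract_all_items(fetch, first_page=1):
    """Follow next_page links until the API reports there are no more."""
    items = []
    page = first_page
    while page is not None:
        payload = json.loads(fetch(page))
        items.extend(payload["items"])
        page = payload["next_page"]
    return items

all_items = extract_all_items(fetch_page)
print(all_items)
```

Passing the fetcher in as an argument keeps the pagination logic testable without network access.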

3. Database Extraction Tools:

SQL-based tools and ETL (Extract, Transform, Load) platforms like Apache NiFi, Talend, and Microsoft SSIS specialize in extracting information from relational databases, data warehouses, and enterprise systems. These solutions handle complex queries, joins, and transformations at scale.
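
The kind of query these tools run can be sketched with Python's built-in `sqlite3` module and an in-memory database. The `customers` and `orders` tables, their columns, and the `extract_customer_totals` helper are hypothetical; an enterprise setup would connect to a production database or warehouse instead.

```python
import sqlite3

# In-memory database with hypothetical tables and rows.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL);
    INSERT INTO customers VALUES (1, 'Asha'), (2, 'Ben');
    INSERT INTO orders VALUES (10, 1, 40.0), (11, 1, 60.0), (12, 2, 15.5);
""")

def extract_customer_totals(connection, min_total):
    """Join customers to orders and return those whose spend meets min_total."""
    query = """
        SELECT c.name, SUM(o.total) AS total_spent
        FROM customers AS c
        JOIN orders AS o ON o.customer_id = c.id
        GROUP BY c.id, c.name
        HAVING SUM(o.total) >= ?
        ORDER BY total_spent DESC
    """
    # The ? placeholder keeps the threshold out of the SQL string itself.
    return connection.execute(query, (min_total,)).fetchall()

rows = extract_customer_totals(conn, min_total=20.0)
print(rows)
```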

4. Document Processing Tools:

OCR (Optical Character Recognition) software and document intelligence platforms extract text and information from PDFs, scanned images, and physical documents. These tools have become increasingly sophisticated, handling multiple languages, handwriting, and complex layouts.

Popular Data Extraction Software Solutions:

1. Open-Source Options:

Data extraction software in the open-source category includes powerful frameworks like Apache Airflow for workflow orchestration, pandas for data manipulation, and various web scraping libraries. These solutions offer maximum flexibility and zero licensing costs but require technical expertise to implement and maintain.

2. Commercial Platforms:

Enterprise-grade platforms provide user-friendly interfaces, managed infrastructure, and professional support. Solutions in this category offer features like scheduling, monitoring, error handling, and team collaboration. While they come with subscription costs, they significantly reduce development time and technical overhead.

3. Cloud-Based Services:

Cloud platforms offer scalable extraction capabilities without infrastructure management. These services handle everything from simple web scraping to complex document processing, charging based on usage rather than fixed licenses.

Best Practices for Effective Data Extraction:

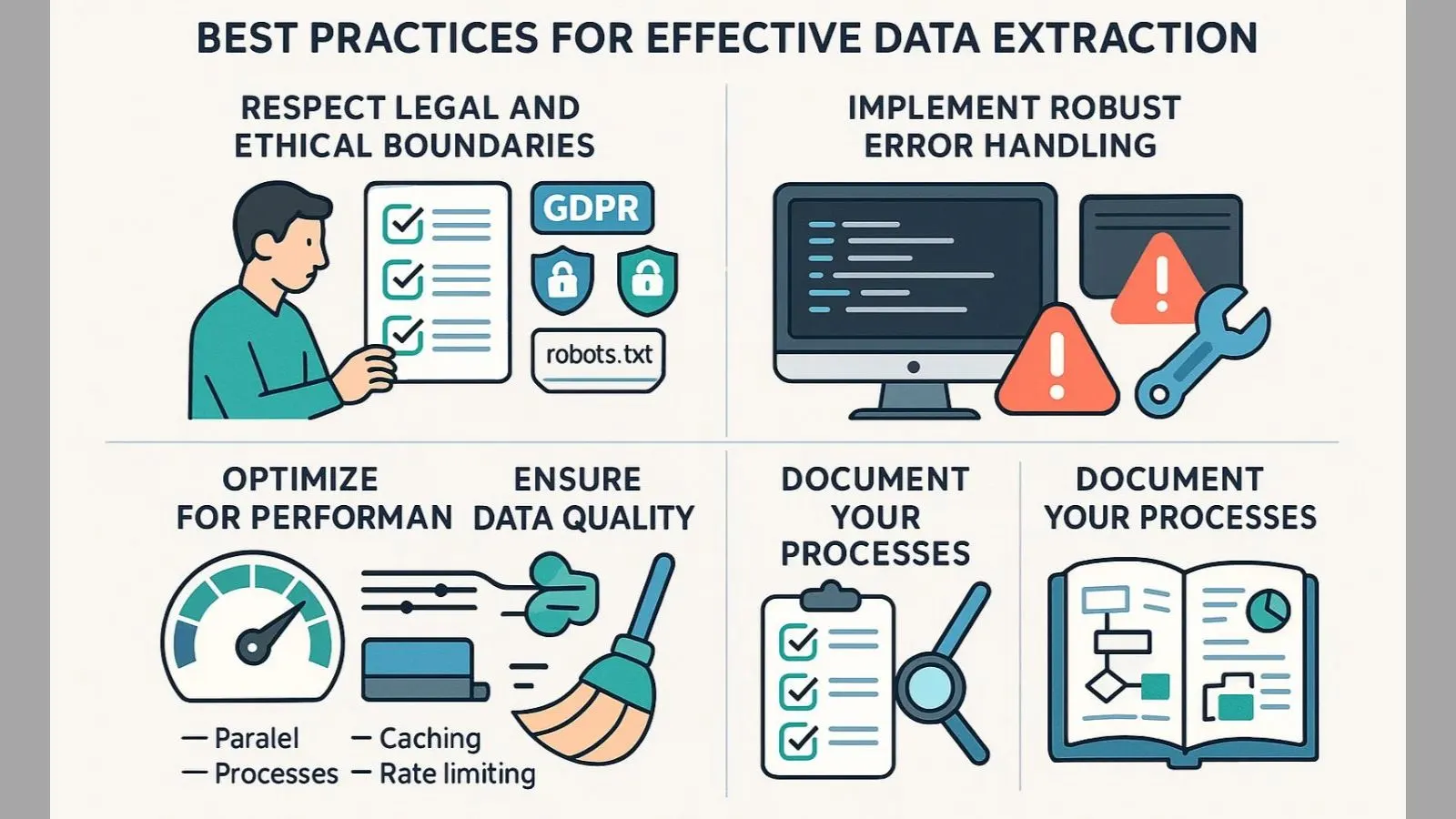

1. Respect Legal and Ethical Boundaries:

Always review terms of service, privacy policies, and applicable laws before extracting information from any source. Obtain necessary permissions, respect robots.txt files for web scraping, and comply with regulations like GDPR when handling personal information.
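
Checking robots.txt rules can be automated with Python's standard-library `urllib.robotparser`. The sketch below parses an inline, made-up robots.txt so it runs offline; the rules, the `example.com` URLs, and the `example-extractor` user agent are assumptions for illustration. Passing this check does not replace reviewing a site's terms of service.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; normally fetched from the target site.
ROBOTS_TXT = """
User-agent: *
Disallow: /private/
Allow: /
"""

rp = RobotFileParser()
rp.parse(ROBOTS_TXT.splitlines())

def is_allowed(url, user_agent="example-extractor"):
    """Return True if the parsed rules permit this agent to fetch the URL."""
    return rp.can_fetch(user_agent, url)

print(is_allowed("https://example.com/products"))
print(is_allowed("https://example.com/private/data"))
```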

2. Implement Robust Error Handling:

Sources change frequently: websites redesign their layouts, APIs update their endpoints, and databases modify their schemas. Build error detection, logging, and alerting into your extraction processes to quickly identify and resolve issues.
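
One possible shape for this is a retry wrapper that logs each failure and backs off before trying again. The sketch below is an assumption-laden illustration: `extract_with_retry`, the `flaky_fetch` stand-in, and the delay values are hypothetical, and a production version would typically catch narrower exception types and send alerts rather than only log.

```python
import logging
import time

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("extractor")

def extract_with_retry(fetch, attempts=3, base_delay=0.01):
    """Call fetch(); on failure, log, wait with exponential backoff, and retry."""
    for attempt in range(1, attempts + 1):
        try:
            return fetch()
        except Exception as exc:  # broad on purpose for the sketch
            logger.warning("attempt %d/%d failed: %s", attempt, attempts, exc)
            if attempt == attempts:
                logger.error("giving up after %d attempts", attempts)
                raise
            time.sleep(base_delay * 2 ** (attempt - 1))

# Hypothetical flaky source: fails twice, then succeeds.
calls = {"count": 0}

def flaky_fetch():
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("source temporarily unavailable")
    return {"status": "ok"}

result = extract_with_retry(flaky_fetch)
print(result)
```

Re-raising after the final attempt lets a scheduler or monitoring system see the failure instead of silently receiving no data.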

3. Optimize for Performance:

Efficient extraction minimizes resource usage and reduces costs. Implement rate limiting to avoid overwhelming source systems, use caching to prevent redundant requests, and parallelize operations when appropriate to speed up processing.
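
Rate limiting and caching can be combined in a few lines, as in the sketch below. The `RateLimiter` class, the `fetch_resource` function, and the 0.05-second interval are illustrative assumptions; the fetch itself is simulated, and parallelization is left out to keep the example short.

```python
import time
from functools import lru_cache

class RateLimiter:
    """Allow at most one call every min_interval seconds."""

    def __init__(self, min_interval):
        self.min_interval = min_interval
        self._last_call = None

    def wait(self):
        now = time.monotonic()
        if self._last_call is not None:
            remaining = self.min_interval - (now - self._last_call)
            if remaining > 0:
                time.sleep(remaining)
        self._last_call = time.monotonic()

limiter = RateLimiter(min_interval=0.05)
request_log = []  # records which keys actually reached the source

@lru_cache(maxsize=128)
def fetch_resource(key):
    """Simulated fetch: cached results skip both the wait and the request."""
    limiter.wait()
    request_log.append(key)
    return f"payload for {key}"

start = time.monotonic()
for key in ["a", "b", "a", "b", "c"]:
    fetch_resource(key)
elapsed = time.monotonic() - start

print(request_log, fetch_resource.cache_info())
```

Because the cache sits in front of the limiter, repeated keys cost nothing, and only the three distinct requests are spaced out.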

4. Ensure Data Quality:

Extracted information is only valuable if it’s accurate and reliable. Implement validation rules, remove duplicates, handle missing values appropriately, and regularly audit output quality to maintain high standards.
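
A minimal sketch of these checks, assuming hypothetical records with `email` and `country` fields, might look like the following. The deliberately simple email pattern, the `UNKNOWN` default, and the `clean_records` helper are illustrative choices rather than recommended rules.

```python
import re

# Deliberately simple pattern for illustration; not a full email validator.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

RAW_RECORDS = [
    {"email": "ana@example.com", "country": "PT"},
    {"email": "ANA@example.com ", "country": "PT"},  # duplicate once normalized
    {"email": "not-an-email", "country": "US"},      # fails validation
    {"email": "li@example.com", "country": None},    # missing value
]

def clean_records(records, default_country="UNKNOWN"):
    """Validate, deduplicate, and fill missing values; return (clean, rejected)."""
    seen = set()
    clean, rejected = [], []
    for record in records:
        email = (record.get("email") or "").strip().lower()
        if not EMAIL_RE.match(email):
            rejected.append(record)
            continue
        if email in seen:
            continue  # drop duplicates, keeping the first occurrence
        seen.add(email)
        clean.append({
            "email": email,
            "country": record.get("country") or default_country,
        })
    return clean, rejected

clean, rejected = clean_records(RAW_RECORDS)
print(clean)
print(rejected)
```

Keeping the rejected records, rather than discarding them, makes it possible to audit what the validation rules are filtering out.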

5. Document Your Processes:

Maintain clear documentation of extraction logic, field mappings, transformation rules, and dependencies. This ensures team members can understand, maintain, and improve extraction workflows over time.

How Globussoft Delivers Data-Driven Digital Solutions:

Globussoft delivers data-driven digital solutions by combining AI intelligence, advanced development expertise, and scalable SaaS technologies to solve real business challenges. The approach starts with collecting accurate, relevant data using smart data extraction and analytics frameworks. This data is then processed through AI-powered models to uncover meaningful patterns and insights.

These insights guide the development of customized digital solutions, ranging from AI-driven platforms and SaaS products to automation tools and enterprise applications. Each solution is designed to be scalable, secure, and aligned with business objectives, ensuring decisions are backed by reliable data and measurable outcomes rather than assumptions.

Common Use Cases and Applications:

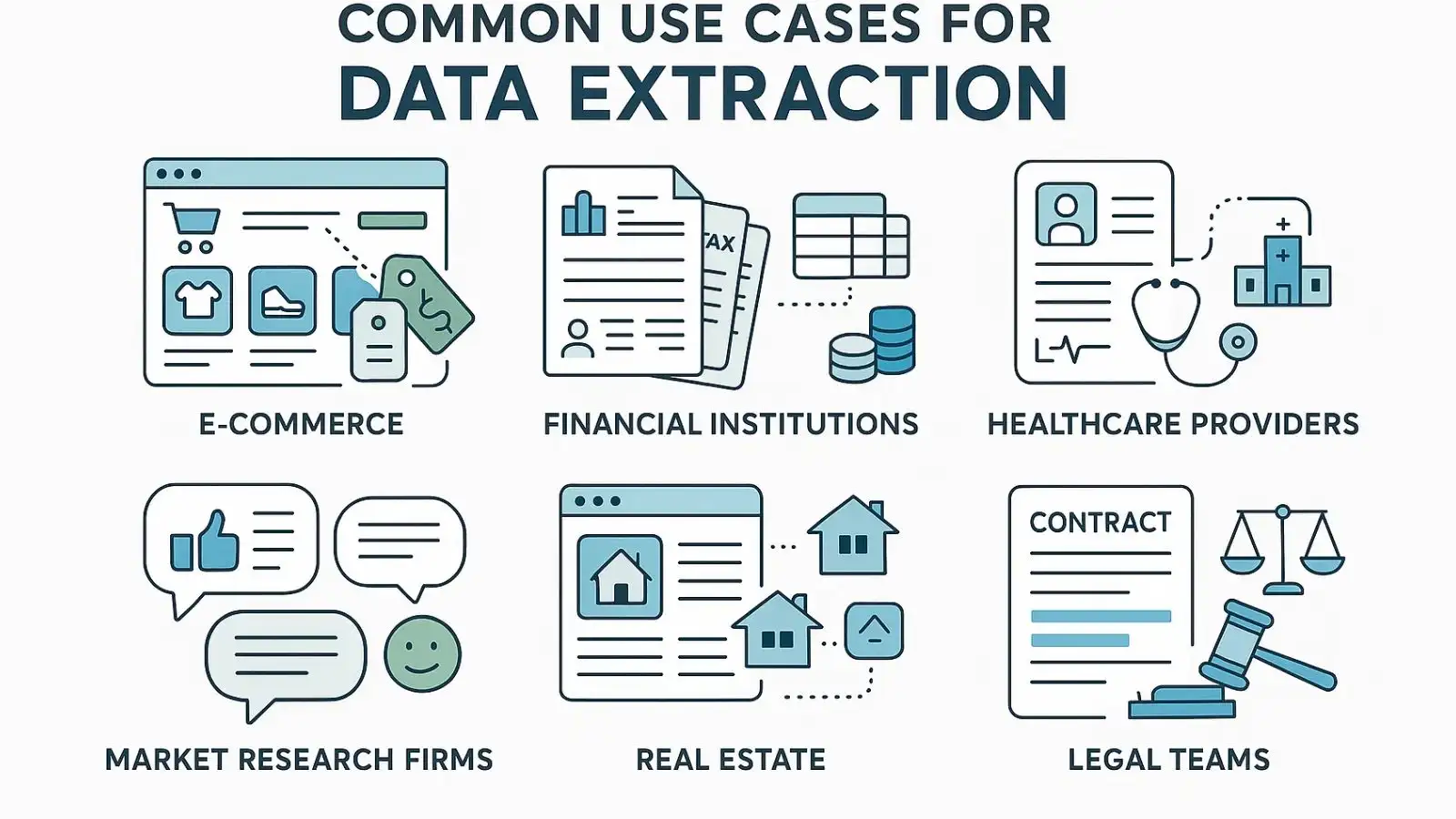

Organizations across industries use extraction capabilities in different ways. E-commerce businesses track competitor pricing by extracting product details from multiple sites.

Financial institutions extract information from bank statements, tax returns, and employment verification documents to process loan applications. Healthcare providers extract patient information from medical records for analysis and reporting.

Market research companies gauge consumer sentiment by mining reviews, social media posts, and forum messages.

Real estate firms aggregate property listings from various sources to build a comprehensive view of the market. During due diligence, legal teams extract relevant clauses from contracts.

Conclusion:

The art and science of extracting information from different sources is what enables organizations to make better decisions, automate repetitive tasks, and uncover valuable insights in their data repositories.

By understanding the techniques, adopting best practices, and selecting the right tools, you can build robust extraction workflows that deliver reliable and scalable results. The key is to begin with clear goals, select the solutions best suited to your technical and business requirements, and keep refining your strategy as results come in.

Whether you choose in-house development, open-source frameworks, or advanced platforms such as GlobussoftAI, investing in the right extraction capabilities pays off in higher efficiency and stronger business performance.

FAQs:

Q1: What’s the difference between data extraction and web scraping?

Ans: Web scraping is a specific type of extraction focused on retrieving information from websites. Extraction is the broader term that includes gathering information from databases, documents, APIs, and other sources beyond just websites.

Q2: Do I need programming skills for data extraction?

Ans: Not necessarily. While programming skills help for complex custom solutions, many modern tools offer visual interfaces that allow non-technical users to extract information without writing code.

Q3: Is data extraction legal?

Ans: Legality depends on the source, method, and purpose. Always review terms of service, respect intellectual property rights, and comply with privacy regulations. Public information extraction for legitimate purposes is generally acceptable, but each situation requires careful evaluation.